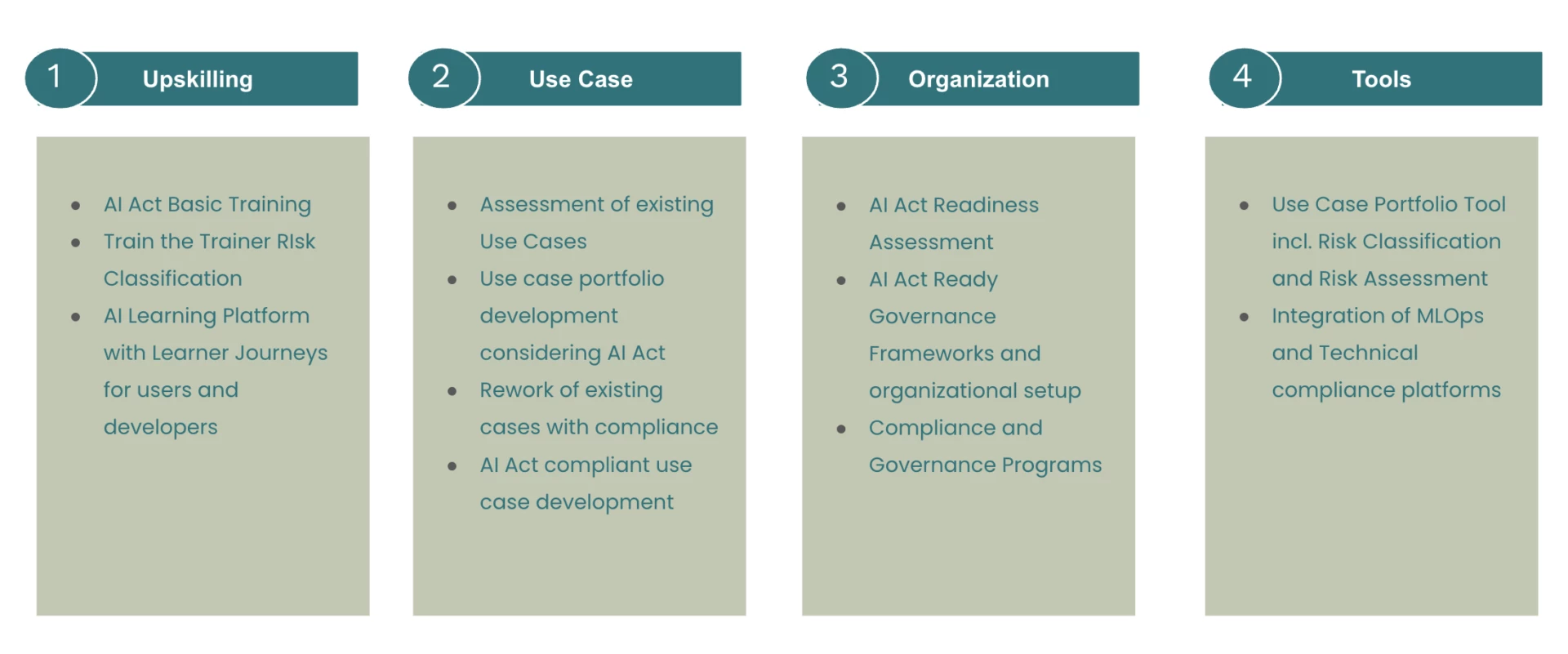

The AI Act requires companies to address AI governance at both the organizational and use-case levels in order to comply with its regulations.

As a result, companies need to find answers to a variety of questions:

- Which of our use cases fall into the high-risk category, and how do we make them compliant?

- How do we achieve compliance at the organizational level as quickly and cost-efficiently as possible, and how do we maintain it?

- How do we upskill our AI experts, users, and other employees on the new requirements?

- How do we reconcile AI Act requirements with, and map them to, other regulations around the world?