Technical Insights Series - Evaluating Machine Learning Platforms

"It was remarkable how appliedAI managed this project with two partners from different industries. In a very agile process, they were flexible and still kept the overall goal in mind: to give us hands-on experience with different ML pipelines and to deliver a perfect documentation and overview." Dr. Thomas Schröck, Wacker Chemie AG

Reproducing, deploying, versioning, and tracking machine learning (ML) pipelines are central parts of the ML lifecycle and among the most common challenges for newly formed ML teams. Ways of working in academia and industry are diverging as requirements for production-ready systems increase. Consequently, the corresponding processes and workflows are also maturing.

Traditional software engineering tools such as issue trackers, version control, and collaboration tools do not adequately reflect the added complexity of the ML lifecycle. The same is true for data science tools such as notebooks or libraries for model training. This gap is particularly pronounced for functions designed to manage data and models. For machine learning pipelines, these are central.

As a result, a variety of open source and commercial vendors promise to make ML pipeline management easier. Because the landscape is immature, no de facto standard toolchain has yet established itself in the market. Thus, enterprises seeking to make strategic decisions about their toolchain face an immediate problem.

Project Background

appliedAI was approached by its partners Wacker and Infineon about this problem. Both are large German companies that have machine learning teams.

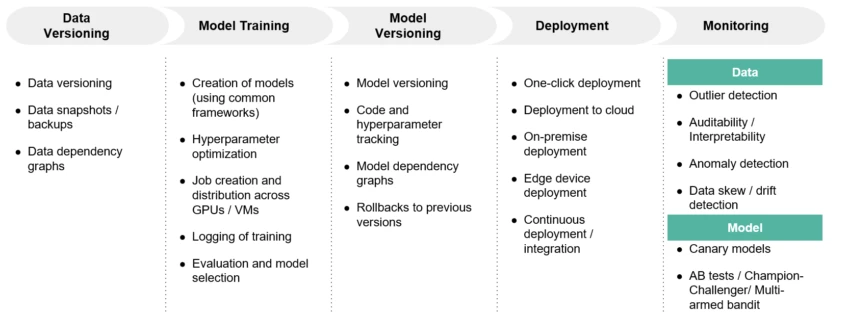

Together with these partners, appliedAI evaluated end-to-end and modular tools to manage ML pipelines. In this project, appliedAI was particularly interested in tools that facilitate data versioning, model training, model versioning, model deployment, and data and model monitoring. To stay within the scope of the ML platform evaluation, the evaluation did not address tools for wrangling, storing or processing data.

Infineon Technologies AG is a German manufacturer of semiconductors headquartered in Neubiberg, Germany. Infineon joined appliedAI's partner network in 2017. The company has over 40,000 employees and is one of the ten largest semiconductor manufacturers in the world. It is a market leader in automotive and power semiconductors. In fiscal 2019, the company generated sales of EUR 8.0 billion.

Wacker Chemie AG is a German multinational chemical company based in Munich. Wacker joined appliedAI's partner network in 2017. The company employs over 14,000 people and operates 24 production sites in Europe, Asia and the Americas.

We asked ourselves the following questions: How can we evaluate an ML pipeline? What steps do we need to go through? And what critical aspects do we need to consider when developing the ML pipeline? In the following article, you can read about the ML pipeline evaluation process for Wacker and Infineon, the issues the companies faced in deploying machine learning, and the approach appliedAI took to build and evaluate the pipelines.

Initial situation and problem statement

Wacker and Infineon had both successfully carried out their first machine learning (ML) projects, improving the work of various business areas.

Both Wacker and Infineon had their own ML teams and were increasingly expanding the scope of their work. However, they quickly identified several flaws in their workflow, including having to manually track and deploy models.

Organizing many different data and deployment pipelines was another challenge. Providing nodes for training ML models was extremely time-consuming. Additionally, reproducibility is an important criterion in both companies' industries.

Infineon and Wacker wanted to expand their ML teams and therefore needed a solution to these problems. They approached appliedAI with the intention of evaluating different types of ML pipelines in a joint project.

Approach and methodology

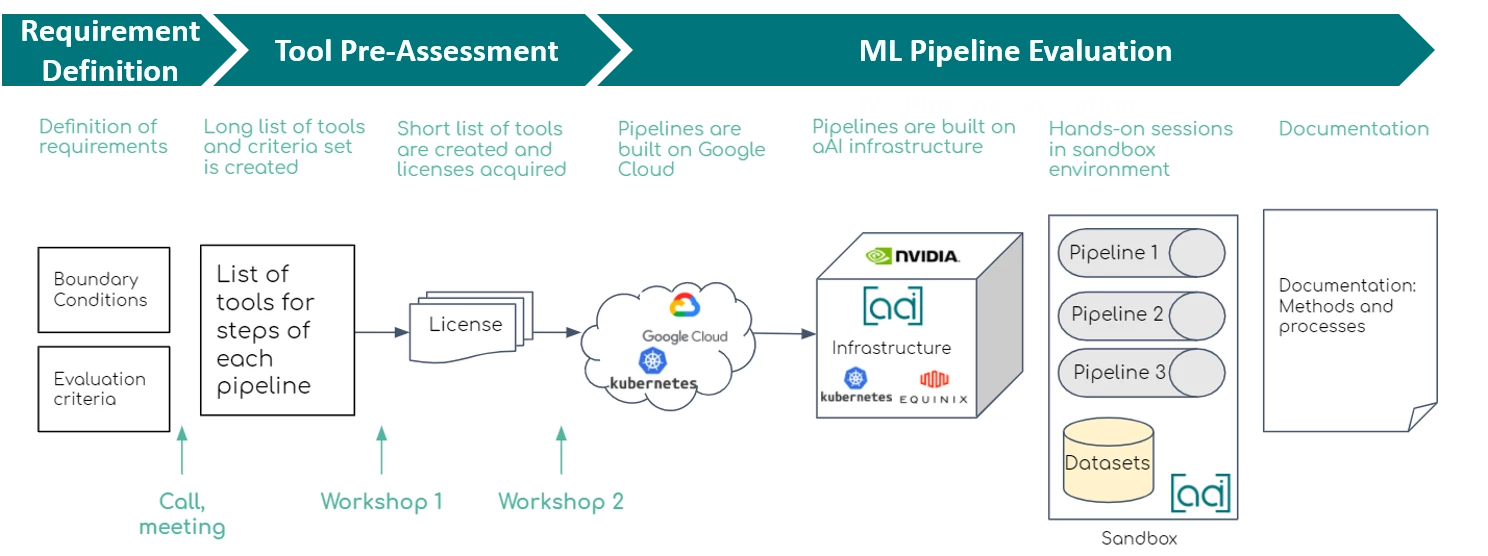

Infineon and Wacker aimed to approach ML projects in a structured manner, overcome disorganization, and reduce the risk of legal consequences. To aid in achieving this goal, appliedAI evaluated various ML platforms. The project followed a three-stage process.

First, appliedAI defined the requirements that were most important for the partners. Next, the team created a list of tools for each step of the pipeline and examined their applicability to the basic requirements.

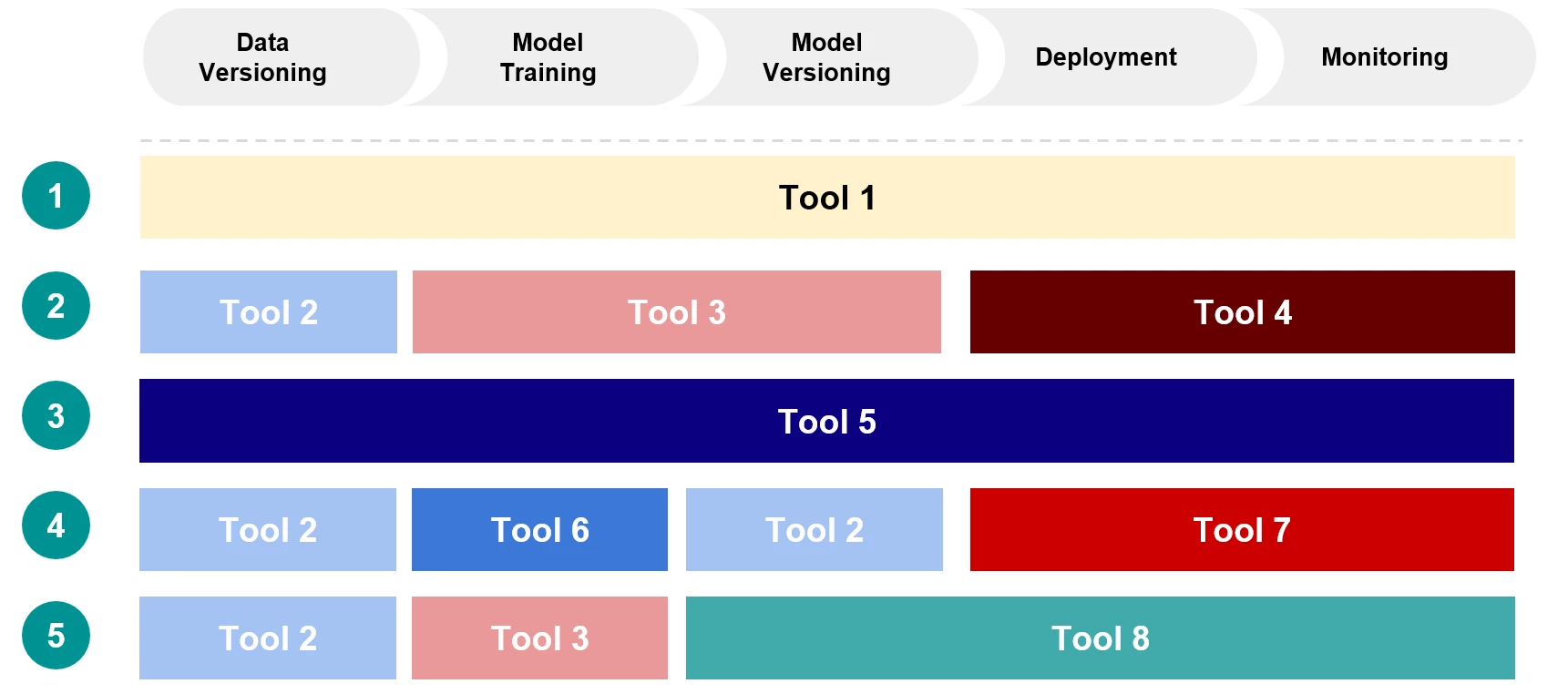

In the second phase - preliminary evaluation of the tools - we created a shortlist of eight tools, which formed the basis for the five ML pipelines. The appliedAI team then contacted the corresponding tool providers to obtain support and test licenses for the selected tools. Once access to all tools was in place, appliedAI deployed them on its own infrastructure and on Google Cloud.

All five ML pipelines were then evaluated against the criteria defined in Phase 1. In the final step, we created the necessary documentation on processes and pipelines, which served as the basis for the evaluation. The phases are described in more detail below.

Process of Machine Learning Pipeline Evaluation

Phase 1: Definition of Requirements

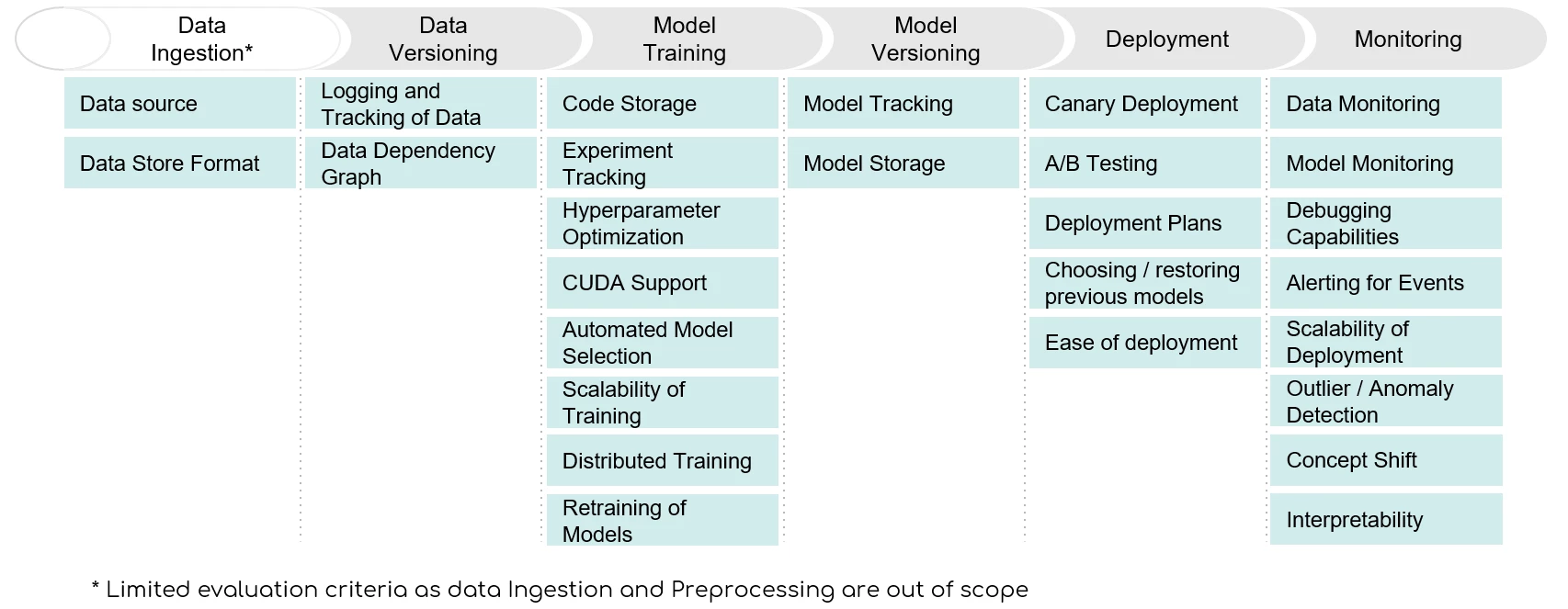

At the outset, we defined the scope, requirements, and multiple evaluation criteria to assess the needs of the partner companies. Within this definition, appliedAI established five crucial stages for evaluating the ML pipeline: data versioning, model training, model versioning, model deployment, and data and model monitoring.

Furthermore, we identified various approaches to designing a pipeline - from modular tools to end-to-end approaches. We also defined multiple criteria in a multi-stage approach.

Based on these, we directed our evaluation towards the pre-defined needs of Wacker and Infineon.

- First, appliedAI created a questionnaire for the teams of the two partner companies, asking for their specific requirements and the relative importance of each. We then discussed the criteria collected from the two teams as a group and reduced them to the most important ones.

- Second, appliedAI and the ML teams categorized the criteria along specific dimensions, e.g., whether they were dealbreakers and thus non-negotiable, or whether they were criteria that should be evaluated during the tool evaluation. Thus, different criteria were used in different phases of the project. For example, we used certain criteria in Phase 2 to filter out tools from the longlist to create the shortlist. In addition, we divided the criteria into the five pipeline stages mentioned above.

- Third, appliedAI created a preliminary weighting for all criteria, estimating their relative importance. Wacker and Infineon were able to interactively adjust this weighting.
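The weighting step described above can be illustrated with a small sketch. This is not the scoring model used in the project, which the article does not specify. It only shows one common way to combine weighted criteria into a single score while keeping dealbreakers out of the weighting. The criterion names, the weights, and the 0-5 rating scale are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    name: str                  # hypothetical criterion label
    stage: str                 # one of the five pipeline stages
    weight: float              # relative importance, adjustable by the partners
    dealbreaker: bool = False  # non-negotiable: used for filtering, not scoring


def normalized_weights(criteria):
    """Rescale the weights of all scored (non-dealbreaker) criteria to sum to 1."""
    scored = [c for c in criteria if not c.dealbreaker]
    total = sum(c.weight for c in scored)
    if total <= 0:
        raise ValueError("at least one scored criterion needs a positive weight")
    return {c.name: c.weight / total for c in scored}


def weighted_score(criteria, ratings):
    """Combine per-criterion ratings (assumed 0-5 scale) into one weighted score."""
    weights = normalized_weights(criteria)
    return sum(weights[name] * ratings[name] for name in weights)


# Hypothetical example data, not taken from the project.
criteria = [
    Criterion("on_premises_deployment", "model deployment", 0.0, dealbreaker=True),
    Criterion("dataset_lineage", "data versioning", 3.0),
    Criterion("experiment_tracking", "model training", 2.0),
    Criterion("drift_alerts", "data and model monitoring", 1.0),
]
ratings = {"dataset_lineage": 4, "experiment_tracking": 5, "drift_alerts": 2}
score = weighted_score(criteria, ratings)
print(f"weighted score: {score:.2f}")
```

Because the weights are normalized on every call, a partner can change a single weight without rebalancing the others by hand, which mirrors the interactive adjustment mentioned above.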

Phase 2: Preliminary Evaluation of Tools

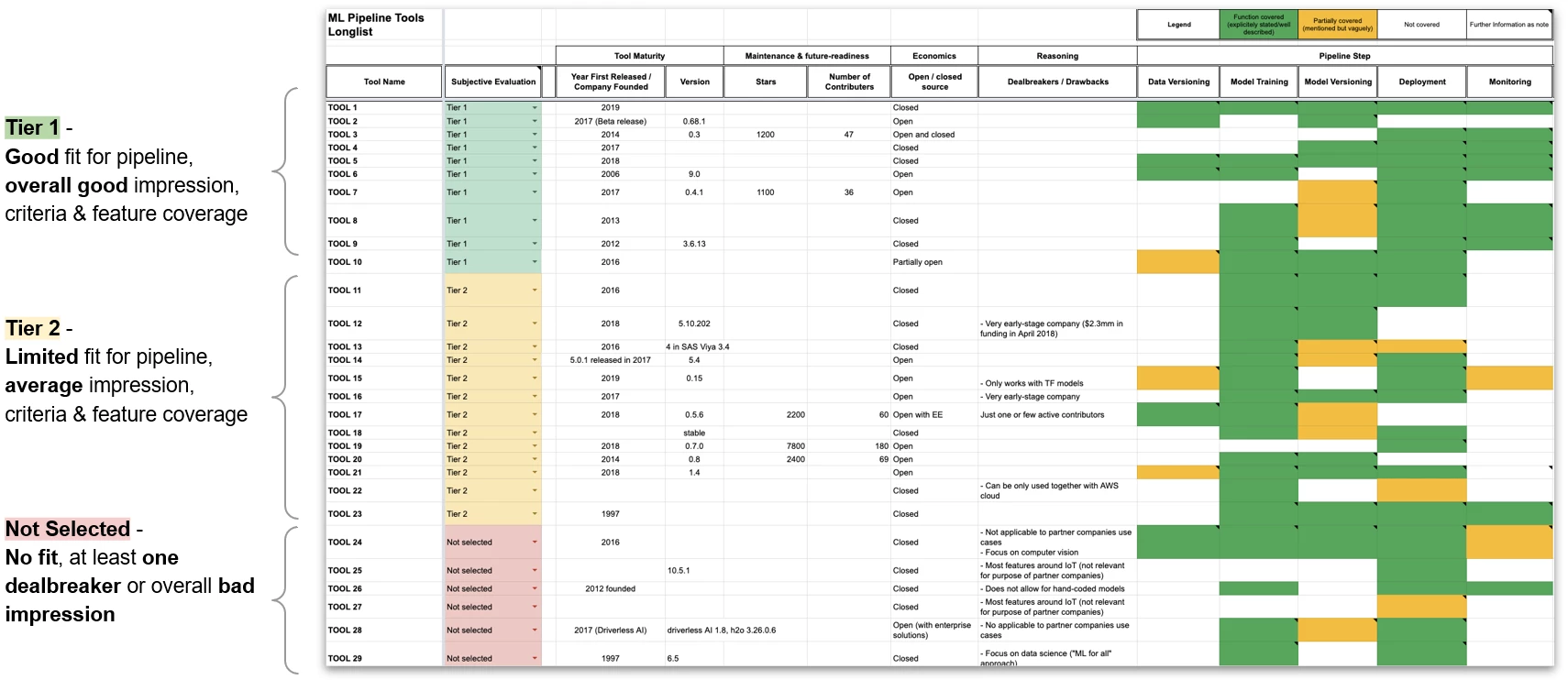

In this phase, we assessed the tools on the longlist against the Phase 1 criteria, using publicly available information. We then categorized the tools into Tier 1, Tier 2, and non-selected tools.

- Tools in Tier 1 were considered well-suited for the pipeline. They made a good overall impression, offered good functionality, and scored well against the criteria.

- Tools in Tier 2 were only partially suitable for the pipeline and offered an average overall impression.

- Tools in the non-selected group contained at least one dealbreaker and offered a poor overall impression. Hence, they were not suitable.
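As a rough illustration of the tiering logic above, the sketch below sorts a tool into one of the three groups. The article does not state how the overall impression was quantified, so the numeric impression score and both thresholds are assumptions made purely for this example.

```python
from enum import Enum


class Tier(Enum):
    TIER_1 = "Tier 1"
    TIER_2 = "Tier 2"
    NOT_SELECTED = "not selected"


def classify(has_dealbreaker: bool, impression: float,
             tier1_min: float = 4.0, tier2_min: float = 2.5) -> Tier:
    """Assign a tier from a dealbreaker flag and an overall impression score.

    `impression` is assumed to be on a 0-5 scale; `tier1_min` and
    `tier2_min` are hypothetical cut-offs, not values from the project.
    """
    if has_dealbreaker or impression < tier2_min:
        return Tier.NOT_SELECTED  # any dealbreaker or a poor impression rules a tool out
    if impression >= tier1_min:
        return Tier.TIER_1        # well-suited, good overall impression
    return Tier.TIER_2            # partially suitable, average impression


# Hypothetical tools, for illustration only.
longlist = {
    "tool_a": (False, 4.5),
    "tool_b": (False, 3.0),
    "tool_c": (True, 4.8),
}
tiers = {name: classify(*facts) for name, facts in longlist.items()}
for name, tier in tiers.items():
    print(f"{name}: {tier.value}")
```

Checking dealbreakers first means that a tool with an otherwise strong impression is still excluded, which matches the non-negotiable role these criteria played in the project.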

Phase 3: Evaluation of ML pipelines

In the next step, appliedAI deployed the tools partly on Google Kubernetes Engine (GKE) in Google Cloud and partly on appliedAI's in-house Kubernetes cluster. This process was supported by a cross-functional project team with ML and DevOps experts.

Subsequently, appliedAI evaluated the five shortlisted candidates, each either a single tool or an integrated pipeline. For this purpose, the team compiled a catalog of questions to be answered when evaluating each pipeline. The criteria were considered at both the tool and the pipeline level to obtain a holistic view of the pipelines used.

Additionally, the teams from Wacker and Infineon were able to access the tools on the infrastructure of appliedAI. Thus, they were able to try out the tools themselves and evaluate them independently. Throughout the process, appliedAI supported the teams from Wacker and Infineon.

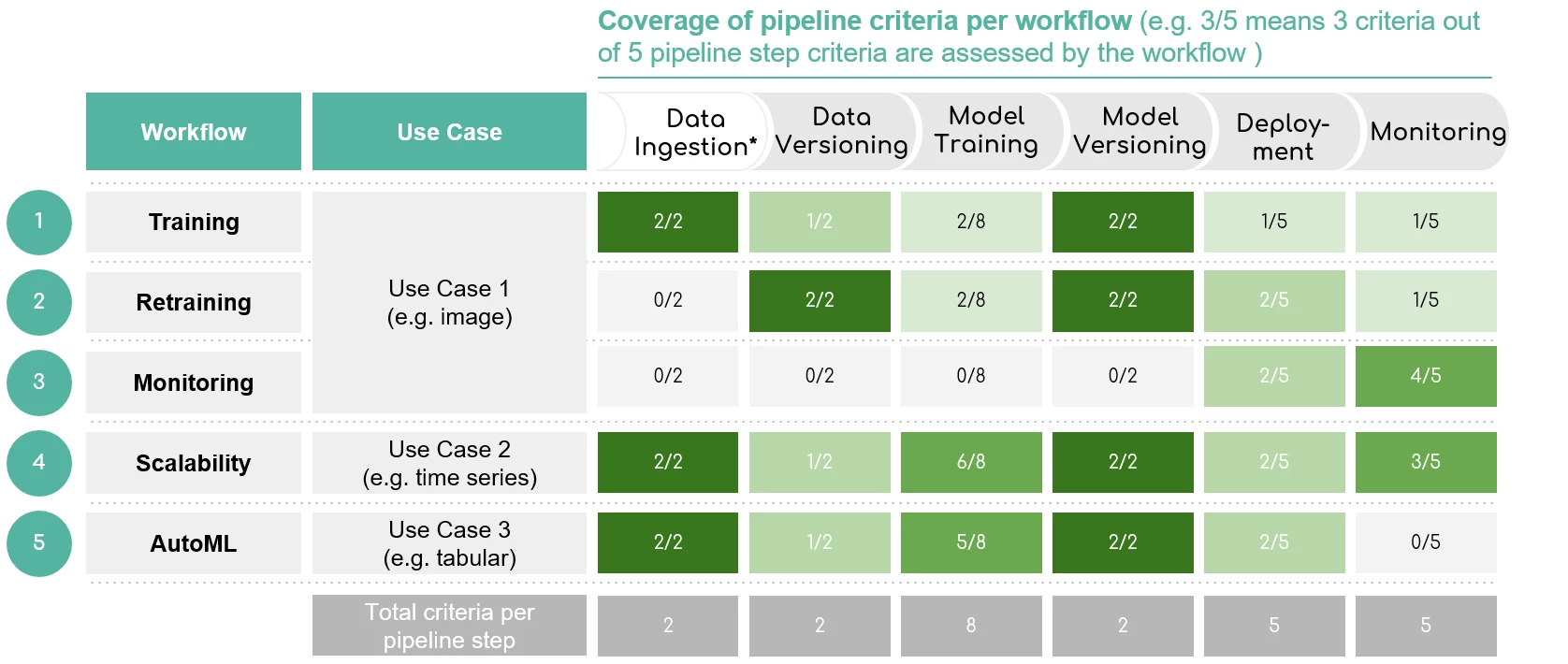

The catalog of questions supplemented the catalog of criteria to be evaluated. The diverse capabilities of ML pipelines were then tested using five compiled ML workflows. The five workflows evaluated were training, storage, monitoring, scalability, and AutoML.

In the final step, we discussed the findings and results of the evaluation in the joint project team with Wacker and Infineon. Throughout the project, this team of experts from appliedAI, Wacker and Infineon met regularly. In line with an agile process, the partners gave concrete feedback on each work step in these meetings, and the resulting changes were then incorporated flexibly while keeping the overall goal and the requirements in view.

Results and insights

"appliedAI did a great job managing this project with multiple clients. They led us through the different project phases in an agile way, always open-minded but never losing focus. We gained practical experience and could benefit from the profound technical expertise of the appliedAI project team. Based on this and the jointly developed blueprint, we feel confident to pursue this topic further." C. Ortmaier, Infineon Technologies AG

Three insights stood out at the end of the project.

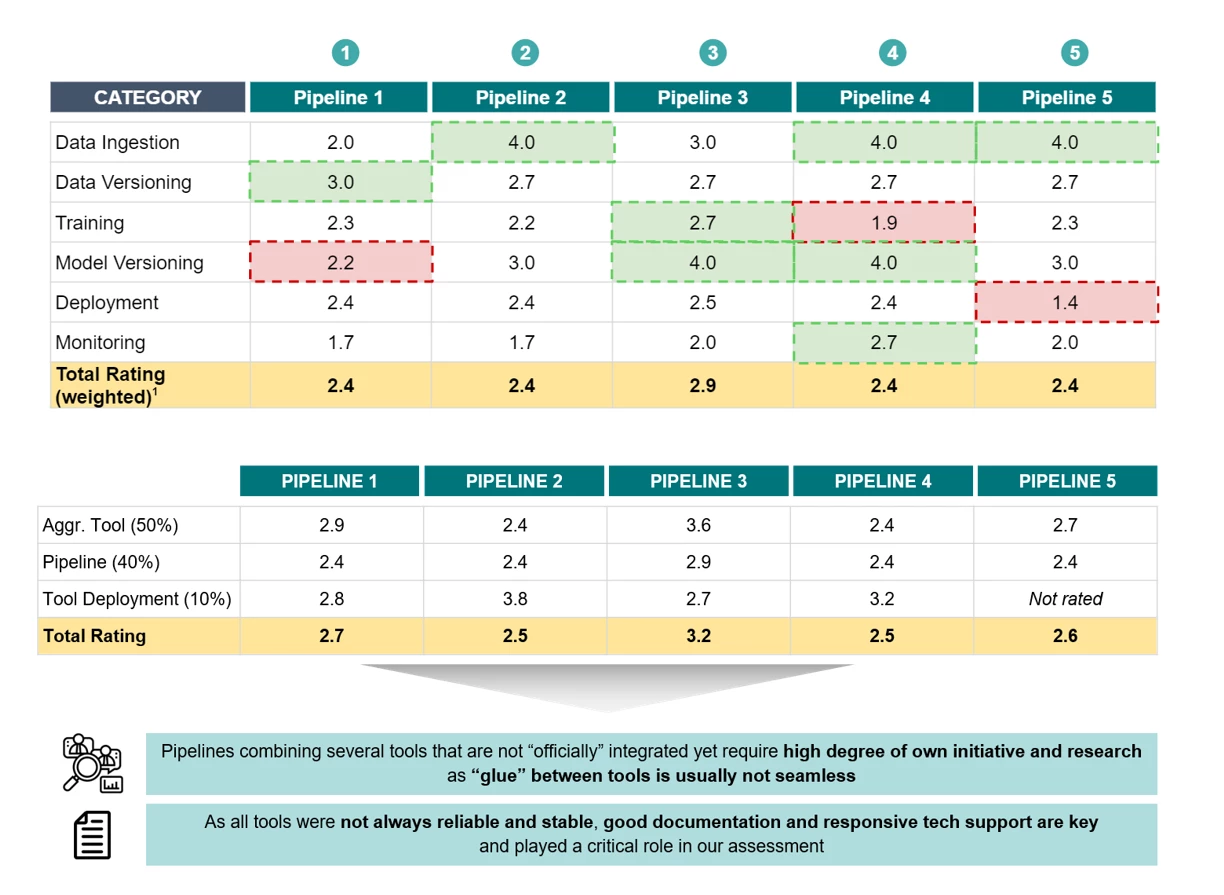

- First, tools with a single user interface and end-to-end solutions often have significant advantages. Many modular tools relied on complex configuration and had hidden dependencies and version conflicts, which resulted in a high overall setup effort. On the other hand, modular tools sometimes offered features that were not yet integrated into end-to-end solutions.

- Second, currently available tools are difficult to integrate and lag behind toolchains in other software engineering areas. appliedAI predicts huge potential for ML tooling start-ups, as the market is not yet fully tapped.

- Third, many of the tools showed serious operational problems and were thus less stable. This highlights the early stage that ML tooling is currently in.

In contrast to the ratings of the individual tools, the ratings of the pipelines were much closer together. Weaknesses in one category were balanced out by strengths in others. There was no outstanding "all-rounder".

By the end of the project, the Infineon and Wacker teams had deep insights into the current tool landscape and understood the pitfalls and challenges of building machine learning pipelines. Likewise, they now knew the unique selling points of the various toolchains.

In retrospect, appliedAI achieved a high level of customer satisfaction with this ML pipeline creation project. The reason for this was the extensive experience with the tools as well as the efficient teamwork and collaboration. In addition, appliedAI provided a practical approach to the tools and a smooth transition from face-to-face to online meetings.

Authors of the case study and ML pipeline project team.

- Dr. Denise Vandeweijer, Director AI Solutions & Projects

- Stephanie Eschmann, Senior Product Marketing Manager at appliedAI Initiative

- Sebastian Wagner, Senior AI Engineer

- Moritz Münker, Software Engineer - AI

- Alexander Machado, Team Lead MLOps Processes at appliedAI Initiative

- Adrian Rumpold, Head of MLOps Engineering at appliedAI Initiative

- Anica Oesterle, AI Engineer & Product Lead

- Patrick Tu, Junior AI Engineer

In partnership with: Infineon Technologies AG and Wacker Chemie AG

