Our motivation for this landscape

This article is intended to provide a quick introduction for anyone interested in the ethical debate about Artificial Intelligence (AI) and to facilitate further research. It gives a broad overview of the status quo of the application-oriented debate about “responsible” or “ethical” use of AI as of the end of 2018. We shed some light on the major international AI ethics practices and the dominant actors in public debates, as well as the measures and principles they are developing and implementing.

We don't take a stand on what the "right" or "wrong" approach is but give you the data to draw your own conclusion. Please note that at the end of 2018 this topic was just picking up speed and we have not updated the landscape since then, so it does not include e.g. the EU's 2019 whitepaper.

The role of ethics

The discussion about the social consequences of artificial intelligence (AI) is often characterized by two extreme positions: an overwhelmingly negative view that foresees the potentially complete annihilation of humanity, and an optimistic, enthusiastic one that argues we are about to enter a fully digital smart paradise. The direction our society will take is based on debate and reasoning. Ethical discussions are necessary in order to develop alternatives for society as a whole, which are desirable for everyone. The role of ethics is to guide what we “should” do based on societal consent. Technology allows us to shape those alternatives.

To this day, a number of actors have already published guidelines, principles, and frameworks to guide the ethical and responsible use of AI. To get a comprehensive overview of the current approaches, we have gathered and analyzed the ethical declarations and guidelines from a large variety of groups and actors. Our overview considers:

- Large State Actors: The US, China, and the EU

- Nations and State Actors: Denmark, Italy, France, Sweden, Finland, United Kingdom, Netherlands, Austria, and Germany

- Non-State Actors: Future of Life Institute, FAT / ML, Ethics in Artificial Intelligence, OpenAI, Partnership on AI, AI4ALL, Ethics and Governance in Artificial Intelligence, AI Ethics Lab, and Stephen A. Schwarzman College of Computing

- Technology Companies: Google, Amazon, Facebook, Apple, and Microsoft.

- Other Declarations (stakeholder-agnostic principles): Ethically Aligned Design, Asilomar Principles, Toronto Declaration, and Montreal Declaration are also briefly explained.

Leading up to this landscape was a series of noteworthy publications that motivated us to give an overview of the current status. Namely:

- (06/2014) China announces a national social credit system for the valuation of individuals and government institutions.

- (06/2015) The Google “Photos” app identifies African-American people as gorillas due to inadequate AI training.

- (12/2015) Cambridge Analytica uses 50 million records from Facebook users to influence their political opinion in numerous election campaigns through targeted Facebook posts.

- (05/2016) In Florida, the first human being dies in an autonomously driving car. At a crossroads, his Tesla could not distinguish a white truck from the open sky and accelerated just before the impact.

- (03/2018) The UN accuses Facebook of promoting violent riots in Myanmar by spreading hate speech.

- (06/2018) Amazon shareholders ask Jeff Bezos in an open letter not to provide his “Rekognition” software to law enforcement.

What is AI-Ethics

The analyzed sources base their views on a set of philosophical ideas and assumptions as well as a few principles by which ethics is applied to AI. While we will not focus on the philosophical basis, the three basic approaches to applying ethics in AI are important to understand, as they appear in most of the sources:

- Ethics IN design: Artificial Intelligence is more than “just another” technology. We understand that and help our partners derive conclusions from it. We educate and train them from the technical aspects to the strategic and sociopolitical elements. The best and brightest always stand on the shoulders of giants: help us teach AI to some of the most influential individuals out there.

- Ethics BY design: When it comes to large scale adoption and the technological stack, AI is very much in its infancy. Therefore we are developing new AI prototypes and tools with our partners in a multitude of domains (from purely virtual e.g. data analysis, NLP, etc. to cyber-physical e.g. drones and CV in the real world, etc.). We base these developments on state-of-the-art technology of our partners (NVIDIA, Google, Cisco, IBM, Pure etc.) and are tackling everything in between – be a part of our engineering team.

- Ethics FOR design: Artificial Intelligence is poised to change the business model of major companies and may disrupt whole industries. Understanding the opportunities, risks and value that can be achieved by using this technology is a complex task for companies and their management. We know that and help them with original content to tackle this challenge: be a part of our strategy team.

It is the nature of ethics that they describe what an entity considers “correct” and “ethical” behavior. Therefore most approaches, be it from a state actor or from a company, include three basic elements: a common, qualitative goal that shall be achieved, a specific set of activities, and a set of expected or partially delivered results. While this structure is often the same, the implementation of these three elements varies greatly. Some rely on small committees defined top-down, while others expect a broad but slow discussion and consensus. The following graphic marks the most prominent approaches.

Ethics in AI: Evaluation & Law

- The purpose of this questionnaire from the EU Commission is to assess the trustworthiness of AI systems (publication in 2019).

- This questionnaire from the "DataEthics.eu" initiative based in Denmark is used to conduct a "Data Ethics Readiness Test". It helps companies to evaluate their handling of data according to ethical aspects (publication 2021).

- For the ethical evaluation of AI systems in public administration, the Berlin-based non-profit organization AlgorithmWatch has developed two practical checklists.

- The EU Commission's proposal for the regulation of AI, published in April 2021, is based on the work of the High-Level Expert Group on AI, consisting of AI experts from all over Europe, and the results of a public consultation process.

- The "Standardization Roadmap Artificial Intelligence" is part of the German government's AI strategy and was published by DIN at the end of 2020. It describes a model for the risk assessment of AI systems (page 14) and contains an overview of existing standards in the field of AI in Chapter 6 (page 153).

- The abridged version of the expert opinion of the Data Ethics Commission (2019) summarizes basic ethical requirements for the collection and processing of personal data. The document addresses legal frameworks and the role of data for AI systems.

- The federal government's Open Data Strategy, published in July 2021, contains concrete recommendations for action by the federal administration.

- With a view to the coming legislative period, the KI-Bundesverband e.V. published an 8-point plan for policymakers in May 2021.

- Researchers at ETH Zurich compare 84 AI ethics guidelines from different countries and describe similarities and differences in content (as of 2019).

- This scientific publication from mid-2021 ("Emerging Consensus on 'Ethical AI': Human Rights Critique of Stakeholder Guidelines") examines 15 "strong" AI ethics guidelines from different regions with a view to similarities and gaps, also in the context of existing law.

- An overview of national AI policies can be found in Chapter 5 (pages 83 ff.) of the European Parliament's report "The ethics of artificial intelligence: issues and initiatives" (as of 2020).

- This June 25, 2021 video interview discusses the different regulatory approaches in Europe and the United States.

Details about the landscape

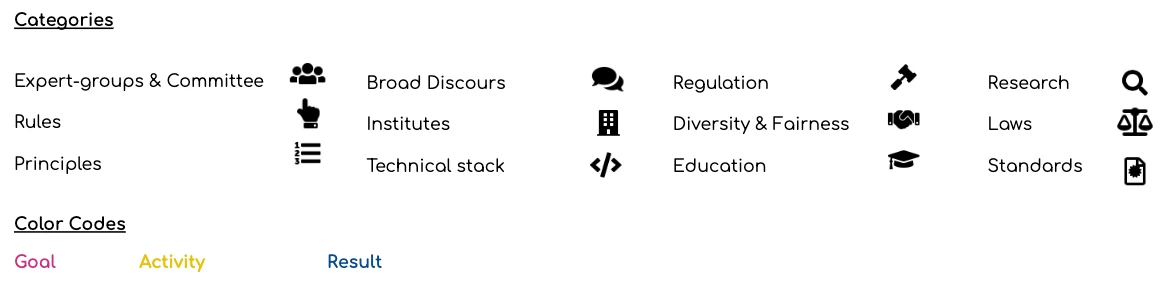

The landscape relies on the following color and symbol coding.

The data comes from a set of sources:

State actors

- Denmark: Published: January 2018

Sources: Strategy for Denmark’s Digital Growth

URL: https://eng.em.dk/media/10566/digital-growth-strategy-report_uk_web-2.pdf

Summary: Denmark describes itself as fundamentally good at working responsibly. Accordingly, the statements on ethical procedures in AI are quite brief. The explicit passage comprises a few lines and mentions general measures such as the establishment of data ethics, possibly including a data ethics code, and discussions on the topic in internationally relevant forums. Total expenditure of DKK 5 million (≈ EUR 670,000) is planned for 2020.

- Italy: Published: March 2018

Sources: Artificial Intelligence. At the Service for Citizens

URL: https://ia.italia.it/en/

Goal: Ensuring that AI should always have the priority to serve the citizens

Summary: Italy has one of the most comprehensive concepts for ethics in AI among the EU states and intends to use it specifically to align all AI strategies: Italy wants to start with a conscious reflection on how we want the world to be in the future. They refer to the virtuous ethics of Aristotle, but at the same time emphasize that an agreement on the improvement of human well-being is also possible with followers of other ethics such as Kant’s ethics, Confucian ethics, Shinto or Ubuntu. It is important to reaffirm the “anthropocentric principle” according to which AI should always serve the citizen first and foremost. In principle, the ethical application of AI in public administration seems to be a central point of reference for Italian considerations.

Ethics in AI is challenge No. 1 in Italy’s AI strategy. They demand the formulation of general principles of justice as a basis for freedom as well as individual and collective law and propose four “elements” for public debate and scientific analysis. One concrete measure described is the establishment of a Trans-disciplinary Centre on AI, with the following objectives: To promote a debate on the evolution of ethics, to support critical reflection on emerging ethical concerns and to facilitate the involvement of experts and citizens in deriving regulations, standards and technical solutions based on technical and social aspects.

- France: Published: March 2018

Sources: AI for Humanity

URL: https://www.aiforhumanity.fr/en/

Summary: France’s AI strategy is based on the report by mathematician and politician Cédric Villani. In the section “Ethical Considerations of AI Recent”, for example, he proposes a kind of special task force of certified experts who are commissioned to audit and test algorithms for constitutional issues. For the training of AI experts, he recommends Ethics by Design as an obligatory component in order to raise awareness of ethical issues right from the start. He also recommends a “digital technology and AI ethics committee” to lead a transparent public debate. Despite all the urgent questions, this debate should give rise to a long-term perspective.

President Macron follows these and other recommendations. His official strategy includes the idea of an international group of experts similar to the Intergovernmental Panel on Climate Change (IPCC). The group should develop independent findings on transparency and fairness and incorporate them into educational programmes. Furthermore, research on more comprehensible AI is needed: a more explainable model, more interpretable interfaces and a better overall understanding of the underlying mechanisms. He would like to offer AI developers ethics training and a discrimination impact assessment for their algorithms. For the public sector, he plans the clear regulation of prediction algorithms in law enforcement and an intensive international debate on any development of lethal autonomous weapons systems (LAWS), including an observatory to curb their proliferation. As further conditions of ethical AI he names diversity and inclusion. Specifically, he wants to raise the proportion of women in digital engineering courses to 40% by 2020. In addition, he would like to draw trainers’ attention to the importance of diversity through guidelines and training. The government should promote the use of AI in social innovation programmes so that workers in the social sector also benefit from the technology.

- Sweden: Published: May 2018

Sources: National approach to artificial intelligence

URL: https://www.regeringen.se/4aa638/contentassets/a6488ccebc6f418e9ada18bae40bb71f/national-approach-to-artificial-intelligence.pdf

Goal: Help to create sustainable AI

Summary: Sweden names “sustainable” AI as its overarching motto and stresses that ethics, protection and safety must not be a downstream consideration, but an integral part of early stages of AI development. Furthermore, the development of interdisciplinary AI knowledge for engineers and decision-makers is desired and transparent and understandable algorithms are required for the development of rules, standards, norms and ethical principles. However, the ethical efforts must remain in balance with the urgent need for data in order to realize the social benefits of AI.

- Finland: Published: May 2018

Sources: Work in the age of artificial intelligence: Four perspectives on the economy, employment, skills and ethics (Publications of the Ministry of Economic Affairs and Employment)

URL: http://julkaisut.valtioneuvosto.fi/bitstream/handle/10024/160980/TEMjul_21_2018_Work_in_the_age.pdf

Goal: Use technology for valuable social objectives

Summary: Finland commits to the “ambitious goal” of using technology to achieve valuable social goals, with a strong focus on work design. A social vision is a prerequisite for the government to influence the development and application of AI in a meaningful way. The authors criticize that, at this point in time, none of the numerous international reports contains a strategy or action plan for achieving the often-desired good artificial intelligence society. For such a society they propose the values “transparency”, “responsibility” and “extensive societal benefits”. They emphasize that the legal and moral responsibility for algorithmic applications must clearly remain with the human being.

If the future developments lead to job losses, support for the people affected is necessary, because after all they have paid for the progress with their taxes. Society should benefit equally from the advantages of AI. Mechanisms for sharing these benefits as well as income transfers are mentioned for this purpose. Developments in the world of work should be discussed at the level of the society as a whole, but also at the level of individual work organisations.

For individual companies, the ethical handling of AI would increasingly influence the brand value. The large companies have already understood this, but further research from the perspective of technology, business management and political science is still necessary. This should be expanded into international cooperation, whereby Finland would also like to take on a pioneering role in the implementation of the AI strategies of the EU.

The success of the strategy presupposes a strong education of the population on the topic of AI. Finland has presented a unique programme to increase understanding, diversity and inclusion in AI: With an AI Challenge since May 2017, Finland has supported a total of around 55,000 citizens (1% of society) to develop AI solutions themselves for their personal tasks.

Other planned measures include the establishment of a parliamentary monitoring group to monitor technological progress, the formulation of a value base geared to the common good (with a centre of excellence specifically for the B2B sector) and the regulation of online platforms based on the example of the European General Data Protection Regulation (GDPR). All in all, despite the hardly foreseeable developments in the field of AI, experiments should continue and experience should be gained. In doing so, one could rely on the support of state institutions in critical situations.

- UK: Published: June 2018

Sources: Data Ethics Framework

URL: https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/737137/Data_Ethics_Framework.pdf

Goal: Become the world leader in ethical AI

Summary: The United Kingdom unequivocally formulates its claim to be the “world leader in ethical AI”. The focus is on the ethical handling of confidential data to facilitate its availability and exchange. Central instruments for securing ethical AI include Data Trusts and a Centre for Data Ethics and Innovation (CDEI). The latter already seems to be the official information point for the concrete ethical approach of the United Kingdom. It is intended to act as a long-term interface between government, business and civil society. Specifically, it aims to assist the government with legislation, work with research institutions and think tanks to identify national challenges and global opportunities, and provide leading companies with a knowledge base and exchange platform for best practices.

The CDEI’s statements on its basic ideas on ethical AI are broadly in line with the general discourse: to increase diversity among developers and to ensure fairness, transparency, liability and accountability. The following fields of action receive comparatively more attention: targeting of citizens by advertisers, frameworks for the exchange of data, intellectual capital and property rights for innovations generated by AI.

- Netherlands: Published: October 2018

Sources: AI voor Nederland. Vergroten, Versnellen en Verbinden

URL: https://order.perssupport.nl/file/pressrelease/a253ded8-1863-4d07-b79d-72639baefc51/6be5dcdc-b9d8-4675-bd80-9425826c840c/AIvNL20181102final.pdf

Summary: The public-private partnership AINED, together with the Boston Consulting Group (BCG), has published a first report for the Netherlands. The report merely recommends continuously gathering experience with AI and its social consequences and then formulating regulations for the technology. Observers see an urgent need for action so that the Netherlands does not fall further behind its formerly good competitive position. AINED and BCG have announced a national strategy for the coming months.

- Austria: Published: November 2018

Sources: Die Zukunft Österreichs mit Robotik und Künstlicher Intelligenz positiv gestalten. White Paper des Österreichischen Rats für Robotik und Künstliche Intelligenz

URL: https://www.acrai.at/images/download/ACRAI_whitebook_online_2018.pdf

Goal: Legal and moral framework for shift of decision-making authority to AI systems

Summary: Austria is the only country examined that addresses robotics in its ethical approach to AI on an equal footing. It is interesting to note that the moral status of robots and AIs is “currently unclear”. Most other authors of ethical procedures would probably currently contradict this and exclude any moral status. Austria is also the only EU state to categorically ban LAWS. Apart from that, the other statements seem to be in line with the general discourse.

In its ethical approach, Austria refers from the outset to the values of the European Human Rights Charter. These include, for example, justice, fairness, diversity and inclusion. A prerequisite for this is the transparency and security of the technology to be achieved. Currently, people always have to make and take responsibility for every ethical decision with regard to AI. A shift of decision-making authority to the AI system requires a clear legal and moral framework. In addition, “computational precautions for ethical behaviour” should be directly integrated into machine architecture and design (ethics by design). In order to align all further decisions with the common good of the population, Austria also plans to hold extensive expert consultations and public discussions.

- Germany: Published: November 2018

Sources: Strategie Künstliche Intelligenz der Bundesregierung

URL: https://www.bmbf.de/files/Nationale_KI-Strategie.pdf

Goal: Use ethics guidelines to contribute to trademark “AI made in Europe”

Summary: The German AI Strategy contains the most concrete measures and budget data of the compared states. The description of the ethical approach, on the other hand, corresponds approximately to the European average in its degree of abstraction.

In principle, the regulatory framework is already very stable due to German legislation and the GDPR, but gaps in algorithm- and AI-based decisions, services and products would be examined. As with many other ethical procedures, transparency, traceability and verifiability of the algorithms are required as protection against bias and manipulation. It will be examined whether government agencies should also be established or expanded to safeguard these features. In any case, auditing standards and standards for impact assessment are planned. Technological possibilities for the enforcement of law and ethical principles are also to be explored.

A focus will be on the digital education of citizens, to empower individuals and strengthen their self-determination in relation to AI. Ethics issues and sociological correlations are to become part of teaching, training and further education. Information campaigns are to be launched to promote the digital and media competence of citizens and to enable and motivate critical reflection on the basis of realistic application scenarios. The government emphasises the need to educate society and to continuously discuss ethical frameworks with equal involvement of the technical and natural sciences as well as the humanities and social sciences. For this purpose, forums and debates are to be made available. The Federal Government itself describes its approach as “ethics by, in and for design” and would thus like to make a contribution to the trademark of “AI made in Europe”.

Large state actors

- China: Published: July 2017

Sources: New Generation Artificial Intelligence Development Plan

URL: https://chinacopyrightandmedia.wordpress.com/2017/07/20/a-next-generation-artificial-intelligence-development-plan/

Summary: China is often presented as a negative ethical example due to the Social Credit System announced for 2020. But even there, the ethical debate is intensifying based on the topic of moral agents. At least with regard to the quantity and concreteness of information on ethical procedures, the AI development plan presented in 2017 is absolutely comparable with European strategies. As with all other strategies, ethical norms, laws and regulations are sought. Their continuous optimisation through research activities is planned until 2030. In addition, an ethical framework for human-computer collaborations and an ethical code of conduct for research and development on AI products are to be developed. Potential risks of AI should be made assessable and there should be solutions for emergency scenarios. In industry and business, China is focusing on promoting self-discipline and strengthening manager morale. The latter was not formulated in such a way in any other ethics approach examined.

- USA: Published: December 2017

Sources: FUTURE of Artificial Intelligence Act

URL: https://www.congress.gov/bill/115th-congress/house-bill/4625/text

Goal: Become the international leader in military ethics and AI security

Summary: In the USA, state responsibility for AI currently lies primarily with the Pentagon. In January 2019, the United States began developing ethical principles for the military use of AI. The FUTURE of Artificial Intelligence Act, the central document of the state’s AI strategy to date, recommends ethics training for AI developers, but also recommends examining whether (!) and how ethical standards can be integrated into the development and application of AI. In February 2019, the Pentagon published the Summary of the 2018 Department of Defense Artificial Intelligence Strategy. Harnessing AI to Advance our Security and Prosperity. The goal is to become the international leader in military ethics and AI security. Stated measures are consultations by experts, investment in research and development and the provision of the results to create international standards for the use of AI in the military field. The US government’s commitment in the field of AI ethics, which still appears to be low in the public perception, is contrasted by a large number of non-governmental institutions with international reach.

- EU: Published: April 2019

Sources: Ethics Guidelines for Trustworthy AI

URL: https://ec.europa.eu/futurium/en/ai-alliance-consultation

Goal: Development of a holistic framework for trustworthy AI systems

Summary: The guidelines of the European Commission, prepared by the High Level Expert Group (HLEG), on ethics in AI were published in April 2019. According to the report, a “trustworthy” AI should contain three components over its entire life cycle: it should be lawful, ethical and robust. The report particularly emphasizes the necessary orientation of AI towards people and their needs (“human-centric design”). Only if AI is perceived as “trustworthy”, i.e. as benign and technically robust, would people want to get involved. The EU thus emphasises the necessity of ethics in AI for its dissemination and further development.

The section on ethical AI proposes ethical guidelines to be taken into account in the development process and in the use of artificially intelligent systems. They should be developed according to the principles of respect for human autonomy, prevention of harm, fairness and explicability. In addition, the development and use of AI systems should focus on diversity. This should prevent that unprotected or historically disadvantaged groups, as well as situations with asymmetries regarding power or information, are included in the system with a certain bias. In addition, risks that could potentially arise through AI systems and thus no longer benefit individuals or society should be considered and mitigated with foresight. These ethical principles are translated into concrete requirements for which technical and non-technical implementation methods are presented.

In addition, the EU envisages further concrete points to develop trustworthy AI systems: these include the promotion of research and innovation, training and education, the establishment of expert groups, as well as a transparent communication regarding capabilities and limitations of ethical AI systems.

With its report the HLEG offers the most complete framework for the implementation of ethical AI among the examined ethical procedures. It represents a process of top-down implementation (from ethical intent to concrete implementation in the situation), but which itself was created bottom-up (from the concrete human situation to ethical intent). The EU Commission intends to continue this dialectical and dialogical process on an ongoing basis. For its part, HLEG proposes to communicate its approach in training courses and educational offers for AI developers and users. In addition, the European Commission plans to play a leading role in the global debate on ethical principles and to support relevant research.

Institutions

- Future of Life Institute: Published: January 2017

Sources: Asilomar Principles

URL: https://futureoflife.org/background/existential-risk/

Goal: Goal of AI research should be to create not undirected intelligence, but beneficial intelligence

Summary: Founded in Cambridge in 2014, the Future of Life Institute is a non-university research institution dedicated to the existentially threatening risks of nuclear wars, biotechnology, climate change and artificial intelligence. With the financial support of Elon Musk, the Institute has supported a total of 47 beneficial AI projects since 2015, with grants ranging from $100,000 to $1.5 million (Future of Life Grants). As part of the 2017 Asilomar Conference, the Institute developed the Asilomar Principles.

The 23 Asilomar Principles are the best known list of ethical principles for beneficial AI. They were formulated in January 2017 at the Asilomar Conference in California by approximately 100 experts from AI, business, industry, philosophy and social sciences. Among the nearly 4,000 signatories to the principles are Elon Musk, Ray Kurzweil and Stephen Hawking. The principles state the importance of safe AI systems, transparency of the algorithms, security of personal privacy, and the overall benefit of AI for all of humanity.

- FAT / ML: Published: July 2016

Sources: Principles for Accountable Algorithms and a Social Impact Statement for Algorithms

URL: http://www.fatml.org/resources/principles-for-accountable-algorithms

Goal: Provide AI developers and product managers with concrete ethical orientation

Summary: FAT/ML stands for Fairness, Accountability and Transparency in Machine Learning. The conference, which has been held annually since 2014, is aimed at international AI researchers dealing with informatics methods for ensuring ethical AI (Ethics by design). The Principles for Accountable Algorithms and a Social Impact Statement for Algorithms were formulated in July 2016 and are intended to provide AI developers and product managers with concrete ethical orientation.

- Ethics in AI Initiative: Published: 2015

Sources: Ethics in Artificial Intelligence Initiative

URL: https://www.cs.ox.ac.uk/efai/

Goal: How can ethical codes for AI be developed?

Summary: The Ethics in Artificial Intelligence Initiative was founded in 2015 with funds from the Future of Life Grants at Oxford University. The initiative focuses less on the ethical challenges associated with AI than on how ethical codes can and should be developed. A comprehensive overview of ethical codes as well as links and documents on the creation of ethical codes are offered online.

- Partnership on AI: Published: September 2016

URL: https://www.partnershiponai.org/

Summary: The Partnership on AI is one of the largest AI initiatives with over 80 partners from different industries. It was founded in September 2016 by Facebook, Amazon and Google, among others. Together with the partners, its goal is to establish best practices for AI and to inform the population about the possibilities and scope of the technology. The partners have committed themselves to eight ethical principles.

- AI4ALL: Published: 2017

URL: http://ai-4-all.org/

Summary: AI4ALL is a non-profit education initiative founded at Stanford University in early 2017. Its aim is to increase diversity and inclusion in AI teaching, research, development and legislation through qualification and the establishment of role models. Its educational offerings are aimed specifically at population groups that are underrepresented at the university.

- Ethics and Governance of Artificial Intelligence: Published: 2017

URL: https://aiethicsinitiative.org/

Summary: The Harvard-based Ethics and Governance of Artificial Intelligence Initiative has been operating since 2017. It was founded in cooperation between the Berkman Klein Center and the MIT Media Lab with a USD 27 million grant from the Knight Foundation. It researches opportunities for private and public decision-makers to use AI in the public interest. In an open competition, the initiative offers a total of USD 750,000 for projects on four ethical challenges.

- AI Ethics Lab: Published: January 2018

URL: http://aiethicslab.com

Summary: The AI Ethics Lab was also founded at Harvard University in January 2018 and offers researchers and practitioners advice, training, workshops and lectures on the ethical implementation of AI. Its mapping workshop helps AI developers identify ethical sources of conflict in their projects and develop solutions without compromising technical integrity.

- MIT Stephen A. Schwarzman College of Computing: Published: October 2018

URL: http://news.mit.edu/2018/mit-reshapes-itself-stephen-schwarzman-college-of-computing-1015

Summary: In October 2018, the Massachusetts Institute of Technology (MIT) announced an investment of USD 1 billion to address global opportunities and challenges through AI. The focus is on the establishment of the Stephen A. Schwarzman College of Computing, named after the CEO of asset manager Blackstone, who personally donated USD 350 million. The promotion of ethics in AI will be an important part of the college’s activities. For example, new curricula are to be developed that combine AI with other disciplines, and forums are to be organized in which leading figures from politics, business, research and journalism discuss the possible consequences of the latest AI advances and identify where action is needed. Work is expected to begin in September 2019.

Tech companies

- Facebook: Published: May 2018

URL: https://www.forbes.com/sites/samshead/2018/05/03/facebook-reportedly-has-a-dedicated-ai-ethics-team/#5690de1d2e5c

Summary: Facebook announced at its F8 developer conference in May 2018 that it had formed its own ethics team. This team cooperates with development teams throughout the company and has developed the software Fairness Flow, which can detect bias in other AI systems. Fairness Flow has been integrated into FBLearner Flow, the software Facebook uses to train its algorithms. Scientific publications are to follow. Facebook plans to further reduce the bias of its software by increasing the diversity of its workforce, for example by opening offices far from Silicon Valley. In January 2019, Facebook announced that it would finance an ethics chair at the Technical University of Munich with an initial EUR 6.6 million over five years to develop ethical guidelines for AI, among other things.

- Google: Published: 2018

Source: Responsible AI Practices

URL: https://ai.google/education/responsible-ai-practices

Summary: Google established an AI Ethics Board as early as 2014. Both its members and its tasks are unknown. In June 2018, Google published its Principles for Ethical AI and shortly thereafter issued recommendations for Responsible AI Practices. In December 2018, Google announced a comprehensive set of measures via its blog. These include training, an AI Ethics Speaker Series, a fairness module in the Machine Learning crash course, an interdisciplinary Responsible Innovation Team, a group of experienced senior consultants, and an advisory board of experienced executives for more complex issues involving multiple products and technologies.

- Microsoft: Published: 2017

URL: https://www.microsoft.com/en-us/research/group/fate/

Summary: Microsoft founded the Fairness, Accountability, Transparency and Ethics group (FATE) in 2017. The group aims to develop computational methods that are both ethical and innovative and plans to publish about them. In the same year, AI and Ethics in Engineering and Research (AETHER) was added, an advisory board of experienced executives recruited from across the company to identify and address ethical issues at an early stage. Microsoft published its own ethical principles in January 2018 and formulated ethical guidelines for the development of AI chatbots in November of the same year. In December 2018, ethical principles for the use of facial recognition were added.

Others

- Ethically aligned design: Published: 2016

Source: Ethically Aligned Design: A Vision for Prioritizing Human Well-being with Autonomous and Intelligent Systems

URL: https://standards.ieee.org/industry-connections/ec/ead-v1.html

Summary: The brochure Ethically Aligned Design: A Vision for Prioritizing Human Well-being with Autonomous and Intelligent Systems is based on the input of several hundred experts from six continents. The first version was released in 2016. A second version was released in 2017 with a request for feedback from the public. The revised version is to be finalized in 2019. It focuses on five principles that provide an ethical framework for the design, development and application of AI. The brochure is published by the Ethics in Action Initiative of the US-based Institute of Electrical and Electronics Engineers (IEEE), the world’s largest technical professional association. Since June 2016, this association has also been working on the P7000 standard series, which develops process models for addressing ethical considerations in the conception and design of IT systems.

- Toronto Declaration: Published: May 2018

Source: Toronto Declaration for Human Rights and Artificial Intelligence

URL: https://www.accessnow.org/cms/assets/uploads/2018/08/The-Toronto-Declaration_ENG_08-2018.pdf

Summary: At the RightsCon Conference in May 2018, Amnesty International and Access Now initiated the Toronto Declaration for Human Rights and Artificial Intelligence. It calls for greater cooperation between the public and private sectors to safeguard human rights in the age of artificial intelligence and recalls, for example, the Vienna Declaration of the UN Human Rights Committee and the International Covenant on Economic, Social and Cultural Rights. Other supporters include Human Rights Watch and the Wikimedia Foundation.

- Montreal Declaration: Published: December 2018

Source: Montreal Declaration for Responsible Development of Artificial Intelligence

URL: https://www.montrealdeclaration-responsibleai.com/the-declaration

Summary: The Montreal Declaration for Responsible Development of Artificial Intelligence was developed by the Université de Montréal together with the Fonds de recherche du Québec. It was presented to the Society for Arts and Technology in December 2018. It is the result of more than a year of consultation with philosophers, sociologists, lawyers and AI researchers as well as online and face-to-face surveys of the population. Its ten principles are intended to provide an ethical framework for the development and application of AI, serve as orientation in the digital transformation of society, and provide a basis for further debate. Continuous revisions of the Declaration are also planned for the future.